Introduction to Virtual Diplomacy

The mechanics of power in the age of "countries of geniuses in a datacenter."

- Governance

- Verification

- Capabilities

Thanks to Adam Gleave, Jan Kirchner, Jarrah Bloomfield, and Siméon Campos for feedback on earlier drafts.

AI is posing a range of emerging challenges, from labor market disruption to the proliferation of dual-use capabilities. We propose "virtual embassies" as a concrete mechanism for enabling proportional policy initiatives. These are programs meant to help third parties answer questions about capability consumption, such as: "How much autonomous research has this organization consumed internally?" or "How much autonomous hacking has this organization sold to customers in this jurisdiction?" Following an overview of sample policies enabled by this mechanism, we provide an evidence-based assessment of the technical readiness of its key components and contrast it with other active efforts targeting these emerging challenges.

Background Context

Extrapolating current trends in AI development has led some to picture the technology's future as "countries of geniuses in a datacenter." These are virtual populations which have deeply internalized all of our collective knowledge, whose thought processes are significantly faster than ours, and whose members can be instantiated directly into intellectual maturity. To see the plausibility of this trajectory more clearly, consider the encyclopedic knowledge of popular language models, the speed at which they tend to articulate their thoughts, and the fact that they boil down to files which can be endlessly copied. We can now predictably convert energy into intelligence; from here on, it is only a matter of improving the conversion rate and increasing the throughput.

Despite the virtual nature of these populations, they may have a very material impact on national security and the labor market. More subtly, they may pose the greatest challenge to our understanding of the world and our place in it since Copernicus. The rest of this document articulates a vision for peacefully navigating the transition to a "new multipolarity": an international order whose heterogeneity would make today's intercultural disputes look quaint in comparison. Despite these lofty ambitions, the vision described below is grounded in the concrete development of technical infrastructure meant to strike at the core of the issue. This document is organized as follows:

- Emerging Challenges. This section addresses the specific issues being tackled by this line of work, while implicitly highlighting the ones not being addressed.

- Proposed Infrastructure. This section provides an accessible account of the mechanism meant to support policy initiatives which address the previously discussed challenges.

- Policy Initiatives. This section connects the previous two and adds substance to the concrete approaches meant to address the emerging challenges.

- Technical Readiness. This section dissects the proposed infrastructure into key components and estimates the maturity of each based on evidence.

- Other Approaches. This section provides a brief taster of alternative efforts meant to address the emerging challenges and contrasts them with the proposed approach.

Finally, we conclude by laying out a path forward focused on technical readiness and policy engagement, helping shape the transition to the new multipolarity.

Emerging Challenges

Instead of trying to make the case for the plausibility of particular concerns — a goal better served by other resources — this section focuses on listing the specific issues motivating this work, with key ideas only included for completeness:

- Instability from labor market disruption. Jobs that could be done entirely remotely account for almost half of US wages, and the proportion of such jobs is even higher in the UK and several EU countries. At the same time, the cost of running inference on language models of a fixed caliber is falling by more than an order of magnitude per year. On the manual labor front, one provider plans to scale mass manufacturing of humanoid robots by more than an order of magnitude within a year. If sustained, these trends would be virtually guaranteed to erase the economic value of most types of human labor, and so rattle a load-bearing pillar of how modern society is organized.

- Instability from dual-use capabilities. Just as the faculties of some of the brightest minds have historically been channeled towards the development of a dizzying array of weaponry, so too may these emerging "countries of geniuses in a datacenter" be capable of developing means of damaging digital, biological, and social systems. Language models have already been observed to identify rudimentary vulnerabilities, possess knowledge of obscure areas of biology, and craft convincing rhetoric. Whether directed by humans or acting on their own reasoning, these systems may soon pose a non-trivial threat to national security.

- Instability from automated research. Many of the flavors of intellectual labor needed to develop these systems in the first place happen to be especially well-suited for automation. For instance, the performance of kernels written for hardware accelerators can be objectively measured relative to the "speed of light," the theoretical maximum performance. By rewarding models for code that improves the conversion rate of energy into intelligence along such axes, developers can mint more intelligence from the same energy budget, intelligence which can then be channeled towards yet more improvements, ad nauseam. Therefore, while not concerning in and of itself, automated research may act as a catalyst for the previous issues.

- Instability from nested arms races. The potential of future models to act as drop-in generators of economically valuable labor, as well as their strategic relevance from a national security point of view, is arguably fueling two interconnected arms races. First, companies are racing to package these "countries of geniuses in a datacenter" as value-generating assets and to monetize the labor extracted from them as a commoditized service. Second, besides seeking these economic cornucopias, governments with enough situational awareness are also racing to maintain their military edge in wielding dual-use capabilities. Add to this a fragile climate of collective security built on declining hegemonic stability, and nations are pushed to fend for themselves, producing textbook game-theoretic inefficiencies.

- Instability from a new multipolarity. Beyond the economic and military instabilities arising from companies and governments domesticating and recruiting the faculties of these virtual populations to further their own familiar goals, the novelty of relating to this very category of entities with geopolitically relevant capabilities is a challenge in its own right. Surveys indicate that a majority of people "were willing to attribute some possibility of phenomenal consciousness to large language models." The situational awareness of frontier models, including their understanding of their own architecture and deployment, is steadily increasing from one generation to the next. Irrespective of the moral patienthood of these virtual populations, it is unclear what "international relations" would entail in a world of "countries of geniuses in a datacenter."

Proposed Infrastructure

This section provides a high-level account of a mechanism meant to support policy initiatives which address the emerging challenges described above. Consider the affordances of embassies, the cornerstone of diplomatic infrastructure. When a sending state establishes an embassy in a receiving state, it can securely communicate with that embassy in order to better understand the situation on the ground. Due to its embeddedness in the receiving state, the embassy can get a close read on the local social, cultural, and economic climate. However, the embassy's ability to directly enact change is extremely limited, as respect for the receiving state's sovereignty is key to its palatability. That said, when used in tandem with the geopolitical weight of the sending state whose interests it represents, an embassy can play a critical role in implementing diverse multilateral initiatives, such as fostering commercial relations, securing defensive commitments, or enabling joint research.

At the core of our proposal lies a network of "virtual embassies." Instead of compounds located on the same territory as the core institutions and organizations of the receiving state, these would be programs running on the same hardware as the core logic responsible for carrying out model inference. As with a physical embassy, it would be possible to securely communicate with a virtual embassy from outside the compute cluster it is running on. Due to its embeddedness in the inference deployment, the virtual embassy would also be able to "look around" and get a close read on high-level properties of the system. Like brick-and-mortar embassies, virtual embassies would have scarcely any options to directly enact change, as recognition of the host's autonomy, as well as respect for its intellectual property, would be critical to their palatability. That said, when used in tandem with the weight of the party whose interests they represent, virtual embassies may play a critical role in supporting a broad range of multilateral initiatives. Note that despite the international connotations of the term, virtual embassies may also support strictly domestic and even corporate initiatives, as seen in the next section.

This diplomatic infrastructure would be especially well-suited for facilitating policy initiatives predicated on quantities of consumed capabilities. Conceptually, if specific capabilities of a frontier model are repeatedly called upon to carry out particular types of labor, we frame that as a large quantity of these capabilities having been consumed over some period of time. On one hand, capabilities may be consumed by the very party managing the inference deployment, such as when carrying out automated research on the conversion rate of energy into intelligence with a view towards improving the very models being deployed. On the other hand, capabilities may be consumed by other organizations simply using the commoditized services exposed by model providers, such as when carrying out autonomous hacking as part of a security audit. Regardless of whether the consumer coincides with the provider, framing capability usage in quantitative terms helps overcome the thorny game-theoretic obstacle of issue indivisibility: instead of bargaining over all-or-nothing outcomes, such as whether a capability may be used at all, parties gain a more expressive grammar for articulating policies in terms of how much of it may be consumed.
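To make the "quantities of consumed capabilities" framing concrete, here is a minimal sketch of a usage ledger. It assumes that some upstream detector has already attributed each unit of generated output to a named capability; the unit (attributed tokens), the capability labels, and the class and function names are all hypothetical. The point is that a quantity supports graded commitments, such as a monthly cap, where a binary ban would not.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class UsageLedger:
    """Hypothetical ledger of consumed capability quantities.

    Assumption: quantities are tokens attributed to a capability by some
    upstream detector; the unit is illustrative.
    """
    # (consumer, capability) -> list of (timestamp, quantity) records
    _records: dict = field(default_factory=lambda: defaultdict(list))

    def record(self, consumer: str, capability: str,
               quantity: float, at: datetime) -> None:
        if quantity < 0:
            raise ValueError("quantity must be non-negative")
        self._records[(consumer, capability)].append((at, quantity))

    def consumed(self, consumer: str, capability: str,
                 start: datetime, end: datetime) -> float:
        """Total quantity consumed within the half-open window [start, end)."""
        entries = self._records.get((consumer, capability), [])
        return sum(q for t, q in entries if start <= t < end)


def within_cap(ledger: UsageLedger, consumer: str, capability: str,
               start: datetime, end: datetime, cap: float) -> bool:
    """A proportional commitment: consumption in the window must not exceed the cap."""
    return ledger.consumed(consumer, capability, start, end) <= cap


if __name__ == "__main__":
    ledger = UsageLedger()
    ledger.record("lab-a", "autonomous_research", 60_000, datetime(2025, 3, 3))
    ledger.record("lab-a", "autonomous_research", 60_000, datetime(2025, 3, 17))
    ledger.record("lab-a", "autonomous_research", 50_000, datetime(2025, 4, 2))
    march = (datetime(2025, 3, 1), datetime(2025, 4, 1))
    print(ledger.consumed("lab-a", "autonomous_research", *march))
    print(within_cap(ledger, "lab-a", "autonomous_research", *march, cap=100_000))
```

Here the March total is 120,000 units, so a hypothetical 100,000-unit monthly cap is exceeded while a 150,000-unit cap would not be; the parties can haggle over the number rather than over whether the capability is allowed at all.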

The more immediate purpose of virtual embassies would be to let external parties resolve a limited set of queries against the inference deployment with a high degree of confidence. These queries would be used to remotely verify that commitments regarding the consumption of particular capabilities are being upheld, with a focus on the degree to which these capabilities have been consumed, as well as the circumstances of their consumption. For instance, assuming a mature state of both technical development and policy specification, virtual embassies may be able to resolve queries such as: How much autonomous research has this organization consumed internally over the course of this month? How much autonomous hacking has this organization sold to external consumers located in this jurisdiction? The following section paints a more vivid picture of several sample policy initiatives which these affordances could power.

To maximize their political palatability and accelerate their uptake, virtual embassies would be designed to exhibit several key properties. First, a virtual embassy would be incapable of disclosing any information whatsoever about the architecture of the model, a property which its open source implementation would allow anyone to verify. Instead, it would be restricted by design to answering only simple queries about high-level properties of the model's internal state during inference. Second, just as diplomatic missions cannot reorganize the governance structure of the receiving state to suit their needs, virtual embassies would not require model providers to recast their inference deployments in an entirely different format just because it would facilitate diplomatic efforts. Instead, virtual embassies should be able to resolve queries with as few computational resources, as little runtime integration, and as little dedicated instrumentation as possible. A later section covers the technical readiness of key components that would help equip virtual embassies with these properties.
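The restriction to simple queries about high-level properties can be illustrated with a resolver that accepts only an allow-list of query types and only ever returns a single aggregate number, never the underlying records. This is a sketch under stated assumptions: the record fields, capability labels, and the `VirtualEmbassy` class are hypothetical, and it presumes the hard part (attributing usage to capabilities) has already been solved. The two query types mirror the two example questions posed earlier in this section.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Iterable, Optional


class QueryKind(Enum):
    """The only query types this hypothetical embassy will answer."""
    INTERNAL_CONSUMPTION = "internal_consumption"
    EXTERNAL_SALES_BY_JURISDICTION = "external_sales_by_jurisdiction"


@dataclass(frozen=True)
class UsageRecord:
    day: date
    capability: str     # e.g. "autonomous_research" (hypothetical label)
    quantity: float     # hypothetical unit, e.g. attributed tokens
    internal: bool      # True if consumed by the host organization itself
    jurisdiction: str   # location of the consumer


@dataclass(frozen=True)
class Query:
    kind: QueryKind
    capability: str
    start: date                         # inclusive
    end: date                           # exclusive
    jurisdiction: Optional[str] = None  # required for sales queries


class VirtualEmbassy:
    """Resolves allow-listed aggregate queries; raw records are never returned."""

    def __init__(self, records: Iterable[UsageRecord]):
        self._records = tuple(records)

    def resolve(self, query: Query) -> float:
        if not isinstance(query.kind, QueryKind):
            raise PermissionError("query type is not on the allow-list")
        in_window = [r for r in self._records
                     if r.capability == query.capability
                     and query.start <= r.day < query.end]
        if query.kind is QueryKind.INTERNAL_CONSUMPTION:
            return sum(r.quantity for r in in_window if r.internal)
        # Only remaining allow-listed kind: external sales to one jurisdiction.
        if query.jurisdiction is None:
            raise ValueError("sales queries must name a jurisdiction")
        return sum(r.quantity for r in in_window
                   if not r.internal and r.jurisdiction == query.jurisdiction)


if __name__ == "__main__":
    embassy = VirtualEmbassy([
        UsageRecord(date(2025, 3, 3), "autonomous_research", 40.0, True, "US"),
        UsageRecord(date(2025, 3, 9), "autonomous_research", 25.0, True, "US"),
        UsageRecord(date(2025, 3, 12), "autonomous_hacking", 10.0, False, "EU"),
        UsageRecord(date(2025, 3, 20), "autonomous_hacking", 5.0, False, "UK"),
    ])
    month = (date(2025, 3, 1), date(2025, 4, 1))
    print(embassy.resolve(Query(QueryKind.INTERNAL_CONSUMPTION, "autonomous_research", *month)))
    print(embassy.resolve(Query(QueryKind.EXTERNAL_SALES_BY_JURISDICTION,
                                "autonomous_hacking", *month, jurisdiction="EU")))
```

Returning one number per query keeps the embassy's disclosure surface small and auditable; anything outside the allow-list, such as a request for raw records or weights, is refused rather than partially answered.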

Policy Initiatives

This section provides more color to the range of policy initiatives which attempt to address the emerging challenges using the affordances made available by the mechanism described above.

- Alleviating instability from labor market disruption. The ability to remotely measure capability consumption pertaining to specific forms of labor may directly support a lean benefit-sharing scheme. People who have historically practiced a profession whose economic value has been eroded by automation may participate in the profits generated by virtual populations engaged in similar labor. Normatively, this would be motivated by the fact that human data was necessary for spawning the virtual populations capable of carrying out that type of labor in the first place, even if it was not sufficient, given the muddied waters of scaling post-training with synthetic data. Pragmatically, companies would be motivated by consumers who may favor model providers which give back more of their profits, while countries would be motivated by the need to handle the disruption of a core pillar on which modern society relies.

- Alleviating instability from dual-use capabilities. The ability to instantly verify the quantities of dual-use capabilities consumed by a given party could facilitate multilateral initiatives analogous to landmark historical ones, such as the numerous nuclear security agreements predicated on literal warhead counts or literal tons of radioactive material. Unfortunately, AI is not just a dual-use technology; it is multiple dual-use technologies wrapped in one, each of which might benefit from proportional agreements grounded in credible threat models across cybersecurity, biosecurity, and beyond. Besides raw quantity, the circumstances of consumption may also be relevant. For instance, companies may be prohibited from "exporting" more than a certain quantity of autonomous hacking to a particular jurisdiction, or be required to only sell it to accredited consumers.

- Alleviating instability from automated research. As described in an earlier section, this and the following challenges are only concerns insofar as they may catalyze the previous ones. Therefore, policy interventions at these levels would only target intermediate choke points which are causally upstream of more overt concerns. At the object level, carrying out research on improving the conversion rate of energy into intelligence, also referred to as algorithmic improvement, requires a highly specific skillset, as evidenced by the eye-watering compensation model providers must offer to preserve their share of the scarce talent supply. Given the ability to remotely gauge how much of the capabilities conducive to this work a certain organization or country has consumed, it may be possible to coordinate commitments regarding acceleration from automated research at the level of corporate governance, domestic policy, and international law.

- Alleviating instability from nested arms races. Arms races are characterized by inefficiencies whereby everyone involved is forced to pay significant costs for little to no gain in relative standing. If all nuclear powers invested in doubling the size of their arsenals, they would roughly preserve their original relative standings, yet none can afford the possibility of being left behind. Verification allows for credible non-proliferation commitments that are conditional on the other parties also following through with theirs. In the context of the emerging challenges, verification could be applied to both nested arms races: to the commercial one at the level of a corporate consortium, and to the military one at the level of the international community. Coordinating on initiatives which reduce negative externalities while preserving Pareto efficiency across the parties involved may well be the highest feat that diplomatic efforts can achieve.

- Alleviating instability from a new multipolarity. Here, the embassy metaphor becomes more than just scaffolding for building intuition about information and ownership; it becomes almost literal. Besides potentially addressing some of the more challenging obstacles in the transition to a world of "countries of geniuses in a datacenter," it may provide the very grammar for relating to these virtual populations in the first place. Paying in energy and fiat for the fruit of their virtual labor may be described as importing goods and services, perhaps with a "robot tax" described as a tariff motivated by human competitiveness. Having the international community coordinate on investing clock cycles in virtual populations carrying out moral reasoning in particular may be described as "subsidizing" the long reflection. Similar infrastructure may even be relevant for diplomatic efforts between multiple such systems, potentially allowing them to work their way out of multipolar failure modes.

Technical Readiness

This section returns to the mechanism described earlier, dissects it into several core components, and estimates the technology readiness level (TRL) of each, drawing on several sources of evidence, including original empirical work.

- Attested integrity. In order to have confidence in query resolution, the external party requires guarantees on the integrity of the virtual embassy. Remote attestation has emerged to allow organizations to confidently run software workloads on hardware operated by other parties. It allows the verifier to remotely evaluate whether the target exhibits a property of the form "the software running on this system corresponds exactly to the blessed version," which can be used to start a chain of trusted software launching additional software with favorable properties. Remote attestation for virtual machines reached general availability on Google Cloud Platform in 2020, while the largest publicly reported GPU clusters exclusively use accelerators equipped with remote attestation capabilities. Remote attestation stacks also natively support public key infrastructure: attestation quotes contain fields which can be filled with public keys, helping establish secure channels to these environments in addition to attesting their integrity. These factors arguably place remote attestation technology at TRL9, the highest level on the scale, corresponding to full commercial deployment in real applications.

- Agnostic profiling. In order to resolve queries concerning internal model state during inference, the virtual embassy would need to "look around" the deployment it is embedded in. As established earlier, it should not assume that the host will invest effort in helping it find information of interest or interpret it; it needs to play its limited hand skillfully. Fortunately, an entire ecosystem of tools for profiling, tracing, and instrumentation has emerged to enable performance engineering, the practice of improving software performance, especially by optimizing its interaction with specific hardware platforms. Intel introduced PEBS (Precise Event-Based Sampling), its low-overhead mechanism for capturing comprehensive information about performance events (e.g., memory loads), as early as the Pentium 4. On GPUs, a developer resource published by a company working on inference infrastructure reads: "No one wakes up and says 'today I want to write a program that runs on a hard to use, expensive piece of hardware using a proprietary software stack.' [...] performance debugging workflows supported by [...] tools built on top of CUPTI are mission-critical." These factors indicate that interfaces for tracking raw system events are also arguably at TRL9, given both decades-old commercial offerings and accelerator-specific products such as Nvidia's Nsight Systems and Nsight Compute.

-

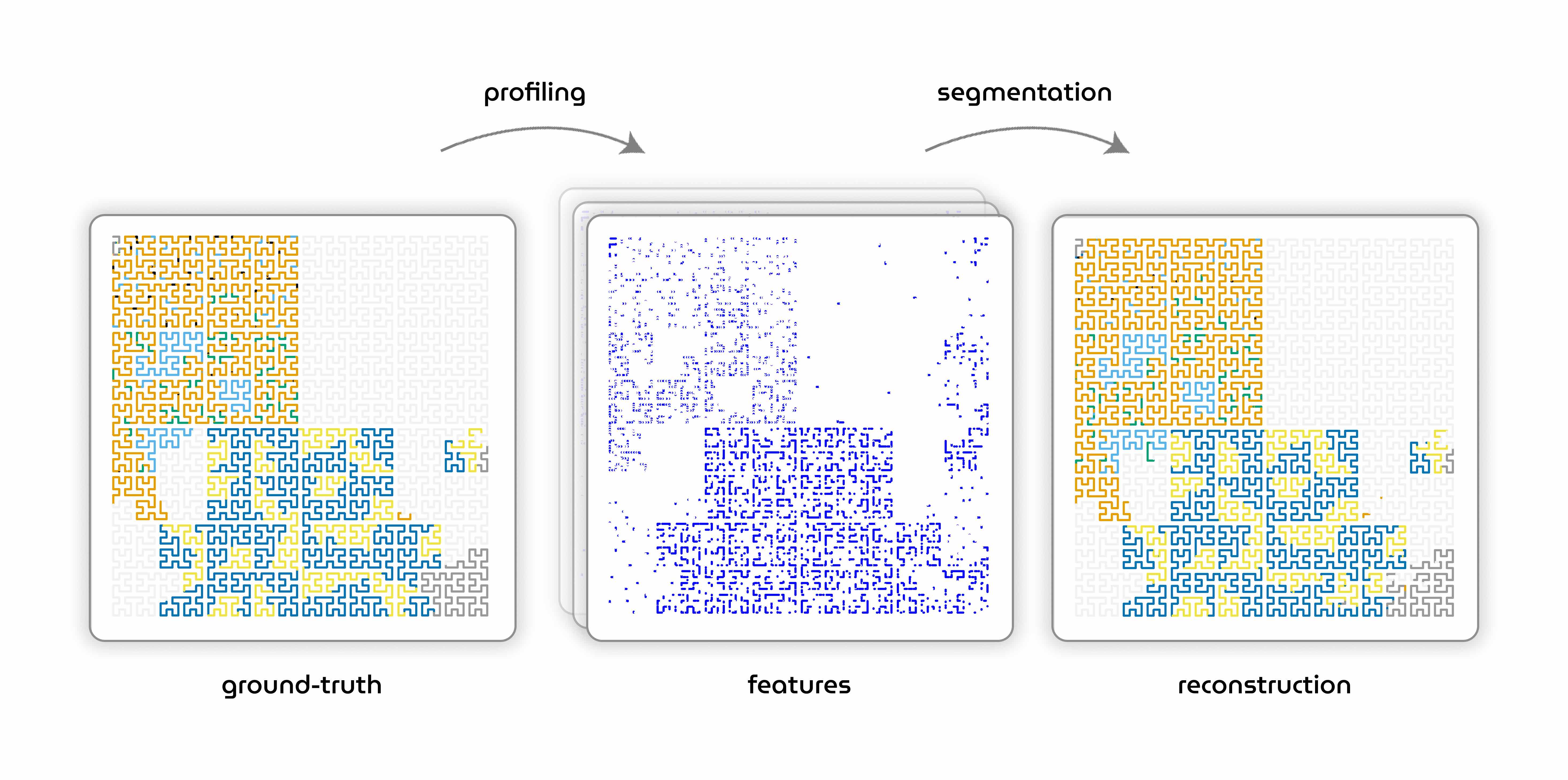

Structural interpretability. In order to be able to resolve queries concerning internal model state during inference, the virtual embassy would need to do more than just collect raw percepts of the ongoing activity on the system, it needs to figure out where the information of interest is located. Over the course of a two-month research sprint, we have empirically demonstrated that it is possible to reconstruct the memory layout of an inference deployment with strong accuracy, based solely on performance events. In brief, we procedurally launched a thousand inference processes on a CPU backend, using different model architectures, model sizes, batch sizes, decoding strategies, etc., and profiled them for three minutes each using Intel PEBS. We preprocessed the raw events to derive over 20 handcrafted features such as: how often has this memory page been written to, from how many different threads has it been read from, how large has the spread in call depth been between these operations, etc. We then trained a CNN with orders of magnitude fewer parameters than the profiled model to segment the process's heap into 14 different objects of interest, such as "parameters of feed-forward layers" or "key-value cache." After several minutes, we have achieved 87% test accuracy, compared to a 7% "random guessing" baseline and a 20% "always predict the most frequent object" baseline. These early results elevate what we call "structural interpretability," termed based on the contrast between structural and functional neuroimaging, to TRL4, corresponding to the assembly of low-fidelity prototypes which integrate the basic technological components.

- Functional interpretability. In order to resolve queries concerning internal model state during inference, the virtual embassy would need to do more than figure out where the information of interest is located; it also needs to interpret it. Representation engineering has emerged as a family of top-down techniques for monitoring and influencing the "thought process" of a frontier model by analyzing and intervening on its internal state as an object abstracted away from the specific neural circuits which give rise to it. Analogous to functional neuroimaging, which studies differences in neural activity across carefully controlled conditions, many representation engineering efforts model the "neural signatures" of different thought processes across carefully controlled contexts, such as ones exhibiting particular emotional undertones or value systems. More advanced interpretability techniques operating on internal model states have memorably been used to isolate the "signature" of the concept of the Golden Gate Bridge in Claude 3 with enough precision to inject it back at high intensity, yielding a temporary version of the model's deployment that seemed obsessed with the landmark. These techniques still face obstacles, including spurious correlations between neural signatures and meaningful concepts, as well as difficulty in collecting signatures from models trained to employ certain capabilities only when encountering very specific trigger phrases. That said, feats such as serving the obsessed version of Claude 3 in production arguably place this family of techniques at TRL7, corresponding to a full-scale prototype demonstrated in the actual operational environment.

- Policy enforcement. Knowing where the information of interest is located and how to interpret it at a particular point in time is not enough for the virtual embassy to resolve queries about quantities of capabilities consumed over a given period, the circumstances of their consumption, and their alignment with given policies. At the very least, virtual embassies require the ability to meter capability usage against reference neural signatures and to track the volume using internal counters. Going beyond blanket policies applied regardless of consumption circumstances would require building on capability-based security, a decades-old information security paradigm whereby access to particular resources at particular times is granted based on possession of particular tokens; the virtual embassy may need to be made aware of these tokens in order to assess whether usage is legitimate. The information security literature also contains precedents for managing access to divisible assets, such as available time or memory. Depending on political will, the virtual embassy may be able not only to signal policy violations, but also to directly enforce remedies, such as by intervening on activations, slowing down the host, or halting its operation. That said, such remedies may also require participatory protocols to prevent government overreach in stifling particular views expressed by models. Despite the diverse pool of precedents to draw from, a number of known unknowns prevent this component from exceeding TRL2, characterized by the general formulation of the technical concept and identification of relevant applications.

Other Approaches

This section focuses on contrasting the proposed approach with some of the other active efforts which attempt to address one or more of the same emerging challenges.

- Lab security. Significant progress is being made to reduce the risk of model weights being stolen, as well as the risk of model inference being abused. Both efforts are primarily motivated by non-proliferation of dual-use capabilities, with the former also partly motivated by non-proliferation of automated research. However, experts are skeptical that security engineering efforts at frontier labs will achieve state-actor-proof security before research engineering efforts at the same organizations develop models with human-level intelligence, due in part to the nested arms races. In addition, forthcoming work on upper-bounding the resources necessary to maintain access to frontier models via API indicates that even low-resource actors may be able to achieve persistence, suggesting that previous coverage partly amounts to security theater. That said, government involvement may help address these considerations more effectively. More glaring, however, is that efforts to lock down the labs do not address concerns about the labs themselves having unfettered access to frontier models, such as instability from labor market disruption, automated research, and increasingly also dual-use capabilities, with major labs announcing partnerships with defense firms.

- Safety frameworks. Significant progress is being made by labs and their partners to implement methodologies for addressing emerging challenges using risk management. These include defining risks, thresholds for tracking them, treatments for addressing them, and governance mechanisms for stewarding the process. Unfortunately, despite their different names, these frameworks share two features: they are generally vague and generally toothless. In fairness, the field is evolving rapidly, which might later invalidate particular design choices, and it is still unclear which mitigations are proportional to which risks, and which risks are worth tracking in the first place. Still, it has been understandably difficult for outside observers to determine whether the current state of safety frameworks is better explained by the scientific obstacles inherent to a rapidly evolving field or by the economic interest in leaving the door indefinitely open for the commercial arms race. However, labs are not solely to blame for the state of such frameworks. The sheer magnitude of impact expected from this technology has led to an overwhelming flood of disparate taxonomies, ontologies, and terminology, which take years to coalesce into regulations in a field that changes drastically from one month to the next.

- Dedicated hardware. While the proposed approach relies on existing hardware features to enable remote attestation and performance monitoring, other initiatives are pursuing the development of entirely new silicon or the retrofitting of existing chips. These have the advantage of not impinging on the existing software environment of the inference deployment, instead relying on dedicated devices which monitor communication across hardware accelerators, their energy consumption, or other signals which can be tracked without any access to the virtual userspace. In a sense, when relying on performance events to make sense of the inference deployment, the proposed approach is also relying on such "side channels," although dedicated hardware solutions tend to focus on channels which are even more to the side. The main drawback of this family of approaches is that they generally rely on the design, manufacturing, and distribution of custom hardware across computing clusters with thousands of accelerators, sometimes while proposing the integration of bleeding-edge security mechanisms, making it difficult for them to reach these deployments in time. If they were to do so, however, they may provide some extremely valuable security properties which software alone may not be able to offer.

- Compute governance. The proposed approach introduces the complexity of treating models not as monolithic units, but as amalgams of capabilities. In contrast, compute governance focuses on managing the raw amount of compute that goes into model training and inference. It benefits from agreed-upon and precise measures of computing power that have existed since the dawn of computing. However, by focusing only on an indirect proxy for what are arguably the true objects of interest (dual-use capabilities, automated research, etc.), it risks disregarding the algorithmic improvements which make the same amount of compute translate into different levels of capability at different points in time. In addition, due to the indiscriminate nature of the proxy, it can only be used to limit training and inference in very broad strokes, without regard to the particular ways in which models are being used. Note that the limited effectiveness of lab security around inference is also caused by a similar cookie-cutter approach forcing a regression to the lowest common denominator, as it feels excessive to carry out advanced know-your-customer (KYC) checks when most users have benign use cases. While the proposed approach attempts to address these nuances, it does so by introducing the interpretability challenge of carving up the space of internal model states into meaningful capabilities.

- Advanced cryptography. The proposed approach relies on relatively rudimentary cryptographic primitives, essentially boiling down to asymmetric cryptography and cryptographic hashes for communicating with the virtual embassy and assessing its integrity. Much more advanced cryptographic methods have been suggested to gain more direct guarantees on more aspects of both model training and inference. For instance, zero-knowledge proofs have been suggested for reliably establishing that a particular output was produced by a particular model. Alternatively, homomorphic encryption has been suggested for allowing models to operate on encrypted inputs, such as inputs containing sensitive information. However, while these techniques have become orders of magnitude more efficient over the past years, at the time of writing they still incur orders of magnitude more overhead than standard model inference, compared to the single-digit percentage overhead typical of profiling. In addition, they require the model to be recast in a different format, such as arithmetic circuits or polynomials over a finite ring, which, while automatable, might completely negate existing performance optimizations for traditional inference.

Next Steps

Having discussed multiple emerging challenges, a mechanism paired with various policy approaches to address them, as well as its readiness and alternatives, we now focus on what it will take to make this vision a reality. Superficially, it will require advancing the readiness levels of selected components, as well as engaging policymakers on the levers made available by the technology. More subtly, it will require popularizing several unfamiliar ways of relating to these topics: the framing of consumed quantities of capabilities, the framing of embassies running on foreign hardware, the framing of trading with virtual populations, etc. While these are still expressed in English, they may collectively amount to a sufficiently different headspace to make engagement difficult when coming from some of the other lines of work, and understandably so given the high stakes and limited time to gamble on research bets.

We therefore believe that the most promising way to bring to life the proposed vision is to focus on explicit demonstrations of exportable practices. More specifically, we aim to build products based on models with dual-use capabilities whose underlying inference deployments have been equipped with the virtual embassies described in the previous sections. By demonstrating their ability to support sample policies in a genuine production setting, and in exploratory partnership with institutional players, we hope to make it easier to visualize how this puzzle piece could fit into a broader collective strategy for navigating the historical transition ahead of us. If you would like to contribute to or support our work, or if you would like to share feedback or suggestions, do reach out.